A SEMINAR REPORT

PRESENTED BY

######## ####### #####

CS/##/###

SUBMITTED TO THE

DEPARTMENT OF COMPUTER SCIENCE, FACULTY OF SCIENCE

MADONNA UNIVERSITY ELELE CAMPUS, RIVERS STATE

IN PARTIAL FULFILLMENT OF THE REQUIREMENT FOR THE AWARD OF BACHELOR OF SCIENCE (B.Sc.) DEGREE IN COMPUTER SCIENCE

SUPERVISED BY

DECLARATION

This is to certify that this seminar work titled "Unicode Standard" was fully carried out by the student with registration number CS/##/### in partial fulfillment of the requirements for the award of the degree of Bachelor of Science in Computer Science. This seminar research was carried out by the student named above and has not been submitted elsewhere for the award of a certificate, diploma or degree.

……………………………… ………………………………..

######## ####### ##### (CS/##/###) DATE

(Student’s name)

……………………………….. ………………………………

######## ####### #####

(Supervisor)

………………………………… ……………………………..

######## ####### ##### DATE (H.O.D)

ACKNOWLEDGEMENT

I would like to thank the Almighty God for giving me the courage to undertake this topic, and my supervisor, ######## ####### #####, for his support and guidance throughout this research. I would also like to extend my heartfelt thanks to my parents, ######## ####### #####. I would also like to thank ######## ####### #####, the HOD of the Computer Science Department, Mrs ######## ####### #####, all the lecturers who have in one way or another helped me in the course of this research, my fellow colleagues, and all those who supported and encouraged me.

TABLE OF CONTENTS

DECLARATION

ACKNOWLEDGEMENT

ABSTRACT

CHAPTER 1

INTRODUCTION

1.1 BACKGROUND OF THE STUDY

1.2 PROBLEM STATEMENT

1.3 OBJECTIVE OF THE STUDY

1.4 SCOPE OF THE REPORT

1.5 SIGNIFICANCE OF THE STUDY

1.6 LIMITATIONS

1.7 GLOSSARY

1.8 ORGANIZATION OF THE CHAPTER

1.8.1 Chapter 2 Literature Review

1.8.2 Chapter 3 Finding/Case Study

1.8.3 Chapter 4 Conclusion

CHAPTER 2

LITERATURE REVIEW

HISTORY OF UNICODE STANDARD

HISTORICAL BACKGROUND

ORIGIN AND DEVELOPMENT

CHAPTER 3

FINDINGS

3.1 COVERAGE OF UNICODE STANDARD

3.1.1 Languages covered by Unicode standard

3.1.2 Design Basis

3.1.3 Text Handling

3.1.4 The Unicode Standard and ISO/IEC 10646

3.1.5 The Unicode Consortium

3.1.6 ASCII TEXT AND UNICODE TEXT

3.2 CHARACTER SEMANTICS

3.3 WHY UNICODE STANDARD WAS DESIGNED

3.4 WHEN TO USE UNICODE-MODE APPLICABLE

3.5 ADVANTAGES OF UNICODE STANDARD

3.6 DISADVANTAGES OF UNICODE STANDARD

3.7 BENEFITS OF UNICODE STANDARD

3.8 THE IMPORTANCE OF UNICODE STANDARD

3.9 PROBLEM OF UNICODE STANDARD

3.10 AVAILABILITY OF UNICODE STANDARD

3.11 DIFFERENCE BETWEEN ASCII AND UNICODE STANDARD KEYBOARD

CHAPTER 4

CONCLUSION

4.1 SUMMARY

4.2 RECOMMENDATION

REFERENCE

ABSTRACT

The Unicode Standard is the universal character encoding scheme for written characters and text. It defines a consistent way of encoding multilingual text that enables the exchange of text data internationally and creates the foundation for global software. The main objective of this research is to study Unicode as a computing industry standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems, including the classical forms of many languages. For example, the unified Han subset of Version 3.0 contains 27,484 ideographic characters defined by the national and industry standards of China, Japan, Korea, Taiwan, Vietnam, and Singapore. The Unicode Standard goes far beyond ASCII's limited ability to encode only the upper- and lowercase letters A through Z: it has the capacity to encode all characters used for the written languages of the world, and more than 1 million characters can be encoded. This allows people in the countries listed above to use the letters and symbols of their own scripts to communicate with each other. The Unicode Standard therefore provides the basis for software that must function all around the world.

CHAPTER 1

INTRODUCTION

1.1 BACKGROUND OF THE STUDY

The Unicode Standard is the universal character encoding scheme for written characters and text. It defines a consistent way of encoding multilingual text that enables the exchange of text data internationally and creates the foundation for global software. As the default encoding of HTML and XML, the Unicode Standard provides a sound underpinning for the World Wide Web and new methods of business in a networked world. Required in new Internet protocols and implemented in all modern operating systems and computer languages such as Java, Unicode is the basis of software that must function all around the world.

With Unicode, the information technology industry gains data stability instead of proliferating character sets; greater global interoperability and data interchange; and simplified software and reduced development costs.

While modeled on the ASCII character set, the Unicode Standard goes far beyond ASCII, which can encode only the unaccented upper- and lowercase letters A through Z along with digits, basic punctuation and control codes. Unicode provides the capacity to encode all characters used for the written languages of the world: more than 1 million characters can be encoded. No escape sequence or control code is required to specify any character in any language. The Unicode character encoding treats alphabetic characters, ideographic characters, and symbols equivalently, which means they can be used in any mixture and with equal facility.
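To illustrate the point about escape sequences, the following Python sketch (an illustration added for this report, using only the standard library) encodes the same mixed Latin and Han string with the legacy ISO-2022-JP encoding, which switches character sets by means of ESC-based escape sequences, and with UTF-8, which needs no such switching. The sample strings are arbitrary.

```python
ESC = 0x1B  # the ASCII "escape" control byte

text = "Tokyo 東京"  # Latin letters mixed with two Han ideographs

legacy = text.encode("iso2022_jp")  # stateful legacy Japanese encoding
utf8 = text.encode("utf-8")         # a Unicode encoding form

print(legacy)
print(utf8)

# ISO-2022-JP switches character sets with ESC sequences;
# UTF-8 never emits the ESC byte unless the text itself contains U+001B.
print(ESC in legacy)  # True
print(ESC in utf8)    # False

# Letters, ideographs and symbols can be mixed freely in one Unicode string.
mixed = text + " © Ω ∑"
assert mixed.encode("utf-8").decode("utf-8") == mixed
```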

The Unicode Standard specifies a numeric value and a name for each of its characters. In this respect, it is similar to other character encoding standards from ASCII onward. In addition to character codes and names, other information is crucial to ensure legible text: a character's case, directionality, and alphabetic properties must be well defined. The Unicode Standard defines this and other semantic information, and includes application data such as case mapping tables and mappings to the repertoires of international, national, and industry character sets. The Unicode Consortium provides this additional information to ensure consistency in the implementation and interchange of Unicode data.
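As a small illustration of a numeric value, a name and a case mapping for each character, Python's standard `unicodedata` module and the built-in `ord` and `str.upper` expose this data. This is a sketch only: the `describe` helper is hypothetical, and the data comes from whatever Unicode version the Python build bundles, which is much newer than Version 3.0.

```python
import unicodedata

def describe(ch: str) -> tuple[str, str, str]:
    """Return the code point label, the character name and the uppercase mapping."""
    return (f"U+{ord(ch):04X}", unicodedata.name(ch), ch.upper())

for ch in "Tçß":
    print(*describe(ch), sep="  ")
```

The last character shows why case mapping tables matter: the uppercase form of "ß" is the two-letter string "SS", so case conversion cannot always be done one character at a time.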

Version 3.0 of Unicode provides two encoding forms: a default 16-bit form and a byte-oriented form called UTF-8 that has been designed for ease of use with existing ASCII-based systems. Later versions also define a 32-bit encoding form, UTF-32. The Unicode Standard, Version 3.0, is code-for-code identical with International Standard ISO/IEC 10646. Any implementation that is conformant to Unicode is therefore conformant to ISO/IEC 10646.
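The ASCII compatibility of UTF-8 can be checked directly. The sketch below (Python standard library only; the sample word is arbitrary) encodes plain ASCII text three ways. `utf-16-be` is used so that Python does not prepend a byte order mark.

```python
ascii_text = "Hello"

ascii_bytes = ascii_text.encode("ascii")
utf8_bytes = ascii_text.encode("utf-8")
utf16_bytes = ascii_text.encode("utf-16-be")  # big-endian 16-bit form, no byte order mark

# For pure ASCII text, UTF-8 produces exactly the same bytes as ASCII.
assert utf8_bytes == ascii_bytes

# The 16-bit form needs two bytes per character, with a zero high byte here.
print(utf16_bytes)  # b'\x00H\x00e\x00l\x00l\x00o'
print(len(ascii_bytes), len(utf8_bytes), len(utf16_bytes))  # 5 5 10
```

This is why existing ASCII files and ASCII-based tools keep working unchanged when they are treated as UTF-8.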

Using a 16-bit encoding means that code values are available for more than 65,000 characters. While this number is sufficient for coding the characters used in the major languages of the world, the Unicode Standard and ISO/IEC 10646 provide the UTF-16 extension mechanism (called surrogates in the Unicode Standard), which allows for the encoding of as many as 1 million additional characters without any use of escape codes. This capacity is sufficient for all known character encoding requirements. Unicode aims to cover all the characters for all the writing systems of the world, modern and ancient. It also includes technical symbols, punctuation, and many other characters used in writing text.
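The surrogate mechanism is simple arithmetic: a code point above U+FFFF is reduced by 0x10000 to a 20-bit value, whose top 10 bits select a high surrogate (U+D800 to U+DBFF) and whose bottom 10 bits select a low surrogate (U+DC00 to U+DFFF). A minimal Python sketch follows; the helper name `to_surrogates` is hypothetical and U+1F600 is just an arbitrary supplementary code point.

```python
def to_surrogates(cp: int) -> tuple[int, int]:
    """Split a supplementary code point (U+10000..U+10FFFF) into a UTF-16 surrogate pair."""
    if not 0x10000 <= cp <= 0x10FFFF:
        raise ValueError("only supplementary code points need surrogates")
    offset = cp - 0x10000             # 20-bit value, 0..0xFFFFF
    high = 0xD800 + (offset >> 10)    # top 10 bits -> high (lead) surrogate
    low = 0xDC00 + (offset & 0x3FF)   # bottom 10 bits -> low (trail) surrogate
    return high, low

high, low = to_surrogates(0x1F600)
print(f"{high:04X} {low:04X}")  # D83D DE00

# Cross-check against Python's own UTF-16 encoder.
assert "\U0001F600".encode("utf-16-be") == bytes([high >> 8, high & 0xFF, low >> 8, low & 0xFF])

# 1,024 high surrogates x 1,024 low surrogates = 1,048,576 additional code points.
print(1024 * 1024)  # 1048576
```

The final line is where the "1 million additional characters" figure comes from.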

1.2 PROBLEM STATEMENT

Unicode is a computing industry standard that allows computers to consistently represent and manipulate text expressed in most of the world's writing systems. However, many older fonts were designed before Unicode existed.

These fonts placed special characters in non-standard "slots" in the encoding. You can see this in Adobe InDesign's Glyphs panel: when you hover over such a special character, the tooltip description (which is based on Unicode) does not match the glyph you are seeing.

1.3 OBJECTIVE OF THE STUDY

The main objective of this research is to study Unicode, the computing industry standard for the consistent encoding, representation, and handling of text expressed in most of the world's writing systems.

1.4 SCOPE OF THE REPORT

This work covers the definition of the Unicode Standard, what it is used for, and the advantages and disadvantages of using the standard. It also lists some of the languages covered by the Unicode Standard. How the characters of individual languages are assigned their codes is not part of this work.

1.5 SIGNIFICANCE OF THE STUDY

The aim of this topic is to show my listeners that the Unicode Standard makes it possible to use the letters and symbols of many different languages on a computer system, not only the English letters A-Z found on a standard keyboard, so that each country can use the symbols of its own script.

1.6 LIMITATIONS

The main problem this topic addresses is that people in countries whose main language is not English had difficulty using the keyboard to type and send messages, because the letters of their own scripts were not supported; examples include China, Cameroon and Russia.

1.7 GLOSSARY

· UTF - Unicode Transformation Format

· UTC - Unicode Technical Committee

· IETF - Internet Engineering Task Force

· UCS - Universal Character Set

1.8 ORGANIZATION OF THE CHAPTER

1.8.1 Chapter 2 Literature Review

This chapter reviews related literature and work done by other researchers on this topic, and shows how that literature relates to this report. The study of the Unicode Standard has proven to be important to the research community.

1.8.2 Chapter 3 Finding/Case Study

Chapter 3 presents my research findings, in which I discuss the results I discovered in relation to the Unicode Standard. It covers the various parts of the Unicode Standard and how they affect and help society.

1.8.3 Chapter 4 Conclusion

This chapter contains the conclusion, summary and recommendations of this technical report on the Unicode Standard.

CHAPTER 2

LITERATURE REVIEW

HISTORY OF UNICODE STANDARD

The "Unicode 88" document is the original Unicode manifesto, authored by Joe Becker. It developed the basic principles for the Unicode design and briefly outlined the history and status of the "Unicode Proposal" as of 1988. Some of its content was reworked for inclusion in the early Unicode pre-publication drafts.

The August 1990 document was a very rough working draft of the standard. The editors and the Unicode Working Group (UWG), the predecessor of the UTC, used it to help lay out the anticipated content and structure of the standard. It contained no code charts or block descriptions. This draft preceded the final decision about the eventual name of the Unicode Consortium, hence the odd name of the consortium on the title page. The Unicode Consortium was not officially incorporated until January 3, 1991.

The October, 1990 draft was fairly widely distributed. It contained very abbreviated introductory text, initial drafts of the code charts, the first draft of the character names index, the first draft of multi-column Unihan charts, and various mapping tables. It was referred to at the time as "Unicode 0.9".

The December, 1990 drafts were even more widely distributed, including to many international reviewers. They were used to elicit public feedback on the draft standard. They put the participating SC2 national bodies on notice that the Unicode Consortium was serious about developing and publishing its standard. The first volume contained a more extended introduction, a full draft of the non-Han code charts, including the first version of the character names list. It also contained the first drafts of the block descriptions. The second volume contained the multi-column Unihan charts and the draft of the Han block introduction. At the time these draft volumes were referred to collectively as the "Final Review Draft".

The May 7, 1991 draft contained the complete content of the non-Han part of the draft standard. It was intended for final review and approval by the Unicode Consortium membership prior to copy editing with the eventual publisher, Addison-Wesley.

HISTORICAL BACKGROUND

The origins of Unicode date to 1987, when Joe Becker from Xerox and Lee Collins and Mark Davis from Apple started investigating the practicalities of creating a universal character set. In August 1988, Joe Becker published a draft proposal for an "international/multilingual text character encoding system, tentatively called Unicode". He explained that "[t]he name 'Unicode' is intended to suggest a unique, unified, universal encoding."

Unicode is intended to address the need for a workable, reliable world text encoding. Unicode could be roughly described as "wide-body ASCII" that has been stretched to 16 bits to encompass the characters of all the world's living languages. In a properly engineered design, 16 bits per character are more than sufficient for this purpose.

His original 16-bit design was based on the assumption that only those scripts and characters in modern use would need to be encoded.

Unicode gives higher priority to ensuring utility for the future than to preserving past antiquities. Unicode aims in the first instance at the characters published in modern text (e.g. in the union of all newspapers and magazines printed in the world in 1988), whose number is undoubtedly far below 2^14 = 16,384. Beyond those modern-use characters, all others may be defined to be obsolete or rare; these are better candidates for private-use registration than for congesting the public list of generally useful Unicode.

In early 1989, the Unicode working group expanded to include Ken Whistler and Mike Kernaghan of Metaphor, Karen Smith-Yoshimura and Joan Aliprand of RLG, and Glenn Wright of Sun Microsystems, and in 1990 Michel Suignard and Asmus Freytag from Microsoft and Rick McGowan of NeXT joined the group. By the end of 1990, most of the work on mapping existing character encoding standards had been completed, and a final review draft of Unicode was ready.

The Unicode Consortium was incorporated on January 3, 1991, in California, and in October 1991, the first volume of the Unicode standard was published. The second volume, covering Han ideographs, was published in June 1992.

In 1996, a surrogate character mechanism was implemented in Unicode 2.0, so that Unicode was no longer restricted to 16 bits. This increased the Unicode code space to over a million code points, which allowed for the encoding of many historic scripts (e.g., Egyptian) and thousands of rarely used or obsolete characters that had not been anticipated as needing encoding. Among the characters not originally intended for Unicode are rarely used Kanji or Chinese characters, many of which are part of personal and place names; this makes them rarely used, but much more essential than envisioned in the original architecture of Unicode.

ORIGIN AND DEVELOPMENT

Unicode has the explicit aim of transcending the limitations of traditional character encodings, such as those defined by the ISO 8859 standard, which find wide usage in various countries of the world but remain largely incompatible with each other. Many traditional character encodings share a common problem in that they allow bilingual computer processing (usually using Latin characters and the local script), but not multilingual computer processing (computer processing of arbitrary scripts mixed with each other).

Unicode, in intent, encodes the underlying characters—graphemes and grapheme-like units—rather than the variant glyphs (renderings) for such characters. In the case of Chinese characters, this sometimes leads to controversies over distinguishing the underlying character from its variant glyphs.

In text processing, Unicode takes the role of providing a unique code point—a number, not a glyph—for each character. In other words, Unicode represents a character in an abstract way and leaves the visual rendering (size, shape, font, or style) to other software, such as a web browser or word processor. This simple aim becomes complicated, however, because of concessions made by Unicode's designers in the hope of encouraging a more rapid adoption of Unicode.
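The difference between code points and their visual rendering can be seen with an accented letter, which Unicode can represent either as one precomposed code point or as a base letter followed by a combining mark. The sketch below uses Python's `unicodedata.normalize`; Unicode normalization is not discussed in this report and is brought in here only to show how software treats the two sequences as equivalent.

```python
import unicodedata

precomposed = "\u00E9"  # é as one code point: LATIN SMALL LETTER E WITH ACUTE
decomposed = "e\u0301"  # e followed by COMBINING ACUTE ACCENT

# The two strings usually render as the same glyph, but the code points differ.
print(precomposed == decomposed)          # False
print(len(precomposed), len(decomposed))  # 1 2

# Normalization maps both sequences to a common form so they compare equal.
nfc = unicodedata.normalize("NFC", decomposed)
nfd = unicodedata.normalize("NFD", precomposed)
print(nfc == precomposed, nfd == decomposed)  # True True
```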

The first 256 code points were made identical to the content of ISO-8859-1 so as to make it trivial to convert existing western text. Many essentially identical characters were encoded multiple times at different code points to preserve distinctions used by legacy encodings and therefore, allow conversion from those encodings to Unicode (and back) without losing any information. For example, the "full width forms" section of code points encompasses a full Latin alphabet that is separate from the main Latin alphabet section. In Chinese, Japanese, and Korean (CJK) fonts, these characters are rendered at the same width as CJK ideographs, rather than at half the width.
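Both concessions described above can be observed with Python's standard codecs and `unicodedata` module. In this sketch, every ISO-8859-1 byte decodes to the code point with the same number, and the fullwidth letter U+FF21 is a separate code point from "A" that compatibility normalization (NFKC, an added technique not named in this report) folds back to the ordinary letter.

```python
import unicodedata

# Every ISO-8859-1 byte value decodes to the Unicode code point with the same number.
latin1 = bytes(range(256)).decode("latin-1")
assert all(ord(ch) == i for i, ch in enumerate(latin1))

# A fullwidth compatibility character versus the ordinary Latin letter.
full_a = "\uFF21"
print(unicodedata.name(full_a))              # FULLWIDTH LATIN CAPITAL LETTER A
print(unicodedata.east_asian_width(full_a))  # F  (fullwidth)
print(unicodedata.east_asian_width("A"))     # Na (narrow)

# Compatibility normalization (NFKC) folds the fullwidth form back to plain "A".
print(unicodedata.normalize("NFKC", full_a))  # A
```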

CHAPTER 3

FINDINGS

3.1 COVERAGE OF UNICODE STANDARD

3.1.1 Languages covered by Unicode standard

The Unicode Standard, Version 3.0, contains 49,194 characters from the world's scripts. These characters are more than sufficient not only for modern communication, but also for the classical forms of many languages. Scripts include the European alphabetic scripts, Middle Eastern right-to-left scripts, and scripts of Asia. The unified Han subset contains 27,484 ideographic characters defined by national and industry standards of China, Japan, Korea, Taiwan, Vietnam, and Singapore. In addition, the Unicode Standard includes punctuation marks, mathematical symbols, technical symbols, geometric shapes, and dingbats.

3.1.2 Design Basis

The primary goal of the development effort for the Unicode Standard was to remedy two serious problems common to most multilingual computer programs. The first problem was the overloading of the font mechanism when encoding characters; the second was the use of multiple, inconsistent character codes because of conflicting national and industry character standards. In Western European software environments, for example, one often finds confusion between the Windows Latin 1 code and ISO/IEC 8859-1. In software for East Asian ideographs, the same set of bytes used for ASCII may also be used as the second byte of a double-byte character. In these situations, software must be able to distinguish between ASCII and double-byte characters.
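Both problems described above can be reproduced with Python's built-in codecs. This is only a sketch: `cp1252` stands for Windows Latin 1, and the katakana "ソ" is chosen because its Shift_JIS form happens to end in byte 0x5C, the ASCII backslash.

```python
# Byte 0x80 means different things in two "Western" encodings.
b = b"\x80"
print(repr(b.decode("cp1252")))   # '€'  (Windows Latin 1)
print(repr(b.decode("latin-1")))  # '\x80' (a C1 control code in ISO/IEC 8859-1)

# In Shift_JIS, the katakana ソ is encoded as 0x83 0x5C, and 0x5C is "\" in ASCII.
sjis = "ソ".encode("shift_jis")
print(sjis)           # b'\x83\\'
print(b"\\" in sjis)  # True: a naive byte search finds a false backslash

# Searching the decoded text instead avoids the false match.
print("\\" in sjis.decode("shift_jis"))  # False
```

With Unicode, each of these characters has one code point of its own, so neither ambiguity arises once the text has been decoded.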

Figure 1-2. Universal, Efficient, and Unambiguous

3.1.3 Text Handling

Computer text handling involves processing and encoding. When a word processor user types in text via a keyboard, the computer's system software receives a message that the user pressed a key combination for "T", which it encodes as U+0054. The word processor stores the number in memory and also passes it on to the display software responsible for putting the character on the screen. This display software, which may be a window manager or part of the word processor itself, then uses the number as an index to find an image of a "T", which it draws on the monitor screen. The process continues as the user types in more characters.
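The keyboard-to-screen flow described above can be modeled in a few lines. This is a deliberately simplified, hypothetical sketch: the `GLYPHS` table and the image file names are invented for illustration, whereas real display software looks glyphs up in a font.

```python
# Hypothetical glyph store mapping code points to glyph image names.
# Real display software would look glyphs up in a font file instead.
GLYPHS = {0x0054: "glyph_T.png", 0x0068: "glyph_h.png", 0x0065: "glyph_e.png"}

def handle_keystrokes(keys: str) -> tuple[list[int], list[str]]:
    """Encode each typed character as a code point, then look up an image to draw."""
    stored = [ord(k) for k in keys]  # the numbers the word processor keeps in memory
    drawn = [GLYPHS.get(cp, "glyph_missing.png") for cp in stored]  # what the display draws
    return stored, drawn

stored, drawn = handle_keystrokes("The")
print([f"U+{cp:04X}" for cp in stored])  # ['U+0054', 'U+0068', 'U+0065']
print(drawn)
```

Only the first step, turning "T" into U+0054, is defined by the Unicode Standard; the glyph lookup belongs to the display software.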

The Unicode Standard directly addresses only the encoding and semantics of text and not any other actions performed on the text. In the preceding scenario, the word processor might check the typist's input after it has been encoded to look for misspelled words, and then highlight any errors it finds. Alternatively, the word processor might insert line breaks when it counts a certain number of characters entered since the last line break. An important principle of the Unicode Standard is that the standard does not specify how to carry out these processes as long as the character encoding and decoding is performed properly and the character semantics are maintained.

3.1.4 The Unicode Standard and ISO/IEC 10646

The Unicode Standard is fully compatible with the international standard ISO/IEC 10646-1:2000, Information Technology--Universal Multiple-Octet Coded Character Set (UCS)--Part 1: Architecture and Basic Multilingual Plane, which is also known as the Universal Character Set (UCS). During 1991, the Unicode Consortium and the International Organization for Standardization (ISO) recognized that a single, universal character code was highly desirable. A formal convergence of the two standards was negotiated, and their repertoires were merged into a single character encoding in January 1992. Since then, close cooperation and formal liaison between the committees have ensured that all additions to either standard are coordinated and kept synchronized, so that the two standards maintain exactly the same character repertoire and encoding.

3.1.5 The Unicode Consortium

The Unicode Consortium was incorporated in January 1991, under the name Unicode, Inc., to promote the Unicode Standard as an international encoding system for information interchange, to aid in its implementation, and to maintain quality control over future revisions.

The Unicode Technical Committee (UTC) is the working group within the Consortium responsible for the creation, maintenance, and quality of the Unicode Standard. The UTC controls all technical input to the standard and makes associated content decisions. Full Members of the Consortium vote on UTC decisions. Associate and Specialist Members and Officers of the Unicode Consortium are nonvoting UTC participants. Other attendees may participate in UTC discussions at the discretion of the Chair, as the intent of the UTC is to act as an open forum for the free exchange of technical ideas.

3.1.6 ASCII TEXT AND UNICODE TEXT

ASCII is a 7-bit code that defines only 128 characters, which is enough for unaccented English letters, digits, basic punctuation and control codes. The Unicode Standard keeps those 128 characters unchanged as its first code points but extends the code space so that more than 1 million characters can be encoded. No escape sequence or control code is required to specify any character in any language, and alphabetic characters, ideographic characters, and symbols are treated equivalently, so they can be used in any mixture and with equal facility.

Figure 1-1. Wide ASCII

3.2 CHARACTER SEMANTICS

The Unicode Standard includes an extensive database that specifies a large number of character properties, including:

· Name

· Type (e.g., letter, digit, punctuation mark)

· Decomposition

· Case and case mappings (for cased letters)

· Numeric value (for digits and numerals)

· Combining class (for combining characters)

· Directionality

· Line-breaking behavior

· Cursive joining behavior

· For Chinese characters, mappings to various other standards and many other properties
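Several of the properties in the list above can be queried from Python's standard `unicodedata` module, which is built from the Unicode Character Database. The sketch below is limited to properties that module exposes; line-breaking and cursive-joining data live in other database files and are not covered. The helper name `properties` and the sample characters are illustrative choices.

```python
import unicodedata

def properties(ch: str) -> dict:
    """Collect a few Unicode Character Database properties for one character."""
    return {
        "name": unicodedata.name(ch, "<unnamed>"),
        "category": unicodedata.category(ch),           # type, e.g. Lu = uppercase letter
        "decomposition": unicodedata.decomposition(ch),  # '' when there is none
        "numeric": unicodedata.numeric(ch, None),        # numeric value, if any
        "combining": unicodedata.combining(ch),          # canonical combining class
        "bidi": unicodedata.bidirectional(ch),           # directionality
        "mirrored": unicodedata.mirrored(ch),            # 1 if mirrored in right-to-left text
    }

# A Latin letter, a precomposed letter, an Arabic-Indic digit,
# a combining accent and a vulgar fraction.
for ch in ["A", "\u00C5", "\u0663", "\u0301", "\u00BD"]:
    print(properties(ch))
```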

3.3 WHY UNICODE STANDARD WAS DESIGNED

· Universal - The repertoire must be large enough to encompass all characters that are likely to be used in general text interchange, including those in major international, national, and industry character sets.

· Efficient - Plain text is simple to parse: software does not have to maintain state or look for special escape sequences, and character synchronization from any point in a character stream is quick and unambiguous.

· Uniform - A fixed character code allows for efficient sorting, searching, display, and editing of text.

· Unambiguous - Any given code value always represents the same character.
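The "Efficient" goal mentions quick synchronization from any point in a character stream. With UTF-8 (used here as the example encoding form), every continuation byte has the bit pattern 10xxxxxx, so a program that lands in the middle of a character can step back to its first byte without scanning from the beginning. A minimal sketch with a hypothetical helper `char_start`:

```python
def char_start(data: bytes, i: int) -> int:
    """Step back from byte offset i to the first byte of the UTF-8 character containing it."""
    # UTF-8 continuation bytes always have the bit pattern 10xxxxxx.
    while i > 0 and (data[i] & 0b1100_0000) == 0b1000_0000:
        i -= 1
    return i

data = "aΩ東\U0001F600".encode("utf-8")  # characters of 1, 2, 3 and 4 bytes
print(list(data))
start = char_start(data, 5)                  # offset 5 is the last byte of 東
print(data[start:start + 3].decode("utf-8"))  # 東
```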

3.4 WHEN TO USE UNICODE-MODE APPLICABLE

Consider working with Unicode-mode applications only in the following situations:

- You need to enable users with different languages to view, in their own languages and character sets, information from a common database.

For example, using alias tables in Japanese and German, users in Japan and Germany can view information about a common product set in their own languages.

- You need to handle artifact names longer than non-Unicode-mode applications support.

For example, application and database names need to include more than eight characters, or you are working with a multibyte character set and need to handle more characters in artifact names.

3.5 ADVANTAGES OF UNICODE STANDARD

- Unicode was originally a 16-bit system and, with surrogates, now supports over a million code points, far more than the 128 characters of ASCII.

- The first 128 characters are the same as in ASCII, which makes Unicode backward compatible with ASCII text.

- 6,400 code points in the Basic Multilingual Plane (U+E000 to U+F8FF) are set aside for private use by users or software.

- Many code points have not been assigned yet, which future-proofs the system.

- It has a positive impact on the performance of the international economy.

- It enables corporations to manage the high demands of international markets by processing different writing systems at the same time.

- It is a character-based encoding.

- Unicode values are assigned to characters (for example, individual vowels and consonants) rather than to their visual forms.

- It can be used on any platform and any operating system.

- It can be used on handheld and mobile devices.

- Different scripts are assigned their own blocks of code points.

- Major Asian scripts are supported along with those of other languages.

- It allows multiple languages in the same data.
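The private-use figure in the list above can be checked against the character database that ships with Python. This sketch counts the code points in the Basic Multilingual Plane whose general category is "Co" (Private Use); additional private-use planes outside the BMP are not counted.

```python
import unicodedata

# Count BMP code points whose general category is "Co" (Private Use).
bmp_private = [cp for cp in range(0x10000) if unicodedata.category(chr(cp)) == "Co"]
print(len(bmp_private))                                    # 6400
print(f"U+{bmp_private[0]:04X}..U+{bmp_private[-1]:04X}")  # U+E000..U+F8FF

# The same figure computed directly from the bounds of the block.
print(0xF8FF - 0xE000 + 1)  # 6400
```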

3.6 DISADVANTAGES OF UNICODE STANDARD

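- Unicode text can take more storage than text in single-byte legacy encodings: in UTF-16 every character takes at least 2 bytes, in UTF-32 every character takes 4 bytes, and in UTF-8 each character outside ASCII takes 2 to 4 bytes.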
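The size cost depends on the encoding form and on the script. The sketch below (standard library only; the helper `encoded_sizes` is hypothetical and the two sample words are arbitrary) compares byte counts. The `-le` codec variants are used so that no byte order mark is added.

```python
def encoded_sizes(text: str) -> dict[str, int]:
    """Return how many bytes `text` needs in each Unicode encoding form (no byte order mark)."""
    return {enc: len(text.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")}

for word in ("hello", "Привет"):
    print(word, len(word), "characters ->", encoded_sizes(word))

# A single-byte legacy code page (Windows-1251) stores the Russian word in 6 bytes.
print(len("Привет".encode("cp1251")))  # 6
```

English text costs nothing extra in UTF-8, whereas the Russian word doubles in size compared with its legacy single-byte encoding.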

3.7 BENEFITS OF UNICODE STANDARD

Support for Unicode provides many benefits to application developers, including:

- Global source and binary.

- Support for mixed-script computing environments.

- Improved cross-platform data interoperability through a common code set.

- Space-efficient encoding scheme for data storage.

- Reduced time-to-market for localized products.

- Expanded market access.

- Elimination of the “round-trip” problem in translated, multibyte implementations, where two different bit values can map to the same character; this problem can occur in communications between multibyte operating systems and application programs.

3.8 THE IMPORTANCE OF UNICODE STANDARD

· Unicode enables a single software product or a single website to be designed for multiple platforms, languages and countries (no need for re-engineering) which can lead to a significant reduction in cost over the use of legacy character sets.

· Unicode data can be used through many different systems without data corruption.

· Unicode represents a single encoding scheme for all languages and characters.

· Unicode is a common point in the conversion between other character encoding schemes.

· Unicode is the preferred encoding scheme used by XML-based tools and applications.
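The point that Unicode acts as a common point in conversions between other encodings can be sketched in Python: legacy bytes are decoded into Unicode text and then encoded into the target encoding. The function name `transcode` is hypothetical; `cp1251` and `koi8_r` are two real but mutually incompatible Cyrillic encodings.

```python
def transcode(data: bytes, source: str, target: str) -> bytes:
    """Convert between two legacy encodings by going through Unicode text."""
    text = data.decode(source)  # legacy bytes -> Unicode code points
    return text.encode(target)  # Unicode code points -> bytes in the other encoding

cp1251_bytes = "Привет".encode("cp1251")
koi8_bytes = transcode(cp1251_bytes, "cp1251", "koi8_r")
print(cp1251_bytes != koi8_bytes)   # True: the two encodings use different byte values
print(koi8_bytes.decode("koi8_r"))  # Привет
```

With Unicode as the pivot, each encoding needs only one mapping to and from Unicode, instead of a separate converter for every pair of encodings.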

3.9 PROBLEM OF UNICODE STANDARD

The Unicode Standard was designed to address two problems common to most multilingual computer programs:

· Overloading of the font mechanism when encoding characters: fonts have often been indiscriminately mapped to the same set of bytes.

· The use of multiple, inconsistent character codes because of conflicting national and industry character standards.

3.10 AVAILABILITY OF UNICODE STANDARD

· Unicode is not vendor-specific.

· It is backward compatible with ASCII.

· Major database, operating system and browser vendors support some form of Unicode encoding.

· Data migration services will be provided free for e-governance developers.

· Office documents such as .doc/.docx, .xls/.xlsx and .txt files can currently be converted to Unicode.

· Database migration tools will also be made available soon.

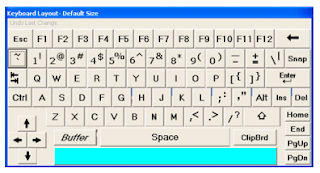

3.11 DIFFERENCE BETWEEN ASCII AND UNICODE STANDARD KEYBOARD

ASCII REPRESENTATION

UNICODE STANDARD REPRESENTATION
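A rough way to compare the two representations is to list, for each character, its ASCII code (if it has one) next to its Unicode code point. The sketch below uses a hypothetical helper `representations` and an arbitrary sample string that includes the euro sign and the Ethiopic syllable U+1200, neither of which exists in ASCII.

```python
def representations(text: str) -> list[tuple[str, str, str]]:
    """For each character, give its ASCII code (if any) and its Unicode code point."""
    rows = []
    for ch in text:
        cp = ord(ch)
        ascii_code = f"{cp} (0x{cp:02X})" if cp < 128 else "not in ASCII"
        rows.append((ch, ascii_code, f"U+{cp:04X}"))
    return rows

for ch, ascii_code, unicode_cp in representations("Aa€ሀ"):
    print(f"{ch}\t{ascii_code:<15}\t{unicode_cp}")
```

For the ASCII letters the two columns hold the same number, while characters beyond ASCII have only a Unicode code point.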

CHAPTER 4

CONCLUSION

The objective of this report is to study the Unicode Standard. The study gives insight into the two serious problems common to most multilingual computer programs that the Unicode Standard was designed to remedy: the overloading of the font mechanism when encoding characters, and the use of multiple, inconsistent character codes. The standard itself addresses only the encoding and semantics of text and leaves other processing to applications, provided that character encoding and decoding are performed properly and character semantics are maintained. I believe that the Unicode Standard has helped people in countries where English is not the main language to use their computer systems to type and send messages to one another.

4.1 SUMMARY

The Unicode Standard is a universal character encoding scheme for written characters and text. It enables users with different languages to view information from a common database in their own languages and character sets. It also enables a single product or website to be designed for multiple platforms, languages and countries, and it is the preferred encoding scheme used by XML-based tools and applications.

The Unicode Standard is a superset of all characters in widespread use today. It provides the capacity to encode all characters used for the written languages of the world, with more than 1 million characters that can be encoded, and it treats alphabetic characters, ideographic characters and symbols equivalently, so that they can be mixed with equal facility.

The Unicode Standard, Version 3.0, contains 49,194 characters from the world's scripts, and its unified Han subset contains 27,484 ideographic characters defined by the national and industry standards of China, Japan, Korea, Taiwan, Vietnam and Singapore.

4.2 RECOMMENDATION

The Unicode Standard should be made available in countries whose main language is not English, for example China, Ethiopia, Japan and Korea. Using the Unicode Standard will enable people in these countries to use desktops, laptops and mobile phones in their own scripts, so that they can easily send messages to each other.

The Unicode Consortium should also continue to release new versions that add more characters, so that the standard becomes even more useful to the public.

REFERENCE

Allen, Julie D. (2011). The Unicode Standard, Version 6.0, pp. 120-160. Mountain View: The Unicode Consortium.

Armbruster, Carl Hubert (1908). Initia Amharica: An Introduction to Spoken Amharic. Cambridge: Cambridge University Press.

Bergsträsser, Gotthelf (1995). Introduction to the Semitic Languages: Text Specimens and Grammatical Sketches.

Bringhurst, Robert (1996). The Elements of Typographic Style.

Campbell, George L. (1990). Compendium of the World's Languages.

Clews, John (1988). Language Automation Worldwide: The Development of Character Set Standards.

Comrie, Bernard (1987). The World's Major Languages. Oxford: Oxford University Press.

Comrie, Bernard (1981). The Languages of the Soviet Union. Cambridge: Cambridge University Press.

Felici, James (2002). The Complete Manual of Typography, 1st edition. Adobe Press.

Graham, Tony (2000). Unicode: A Primer. M&T Books.

Korpela, Jukka K. (2006). Unicode Explained, 1st edition. O'Reilly.

The Unicode Consortium (2000). The Unicode Standard, Version 3.0. Addison Wesley Longman.

The Unicode Consortium (2003). The Unicode Standard, Version 4.0. Addison-Wesley Professional.

The Unicode Consortium (2006). The Unicode Standard, Version 5.0, Fifth Edition. Addison-Wesley Professional.
